Making our Laravel test suite ready for parallel testing

Published on February 1, 2021 by Freek Van der Herten

To make sure that our service is working correctly, we have a suite of automated tests. The test suite is executed when we make changes and deploy something to production.

Using the new parallel testing feature that recently landed in Laravel, we managed to run our test suite about four times faster.

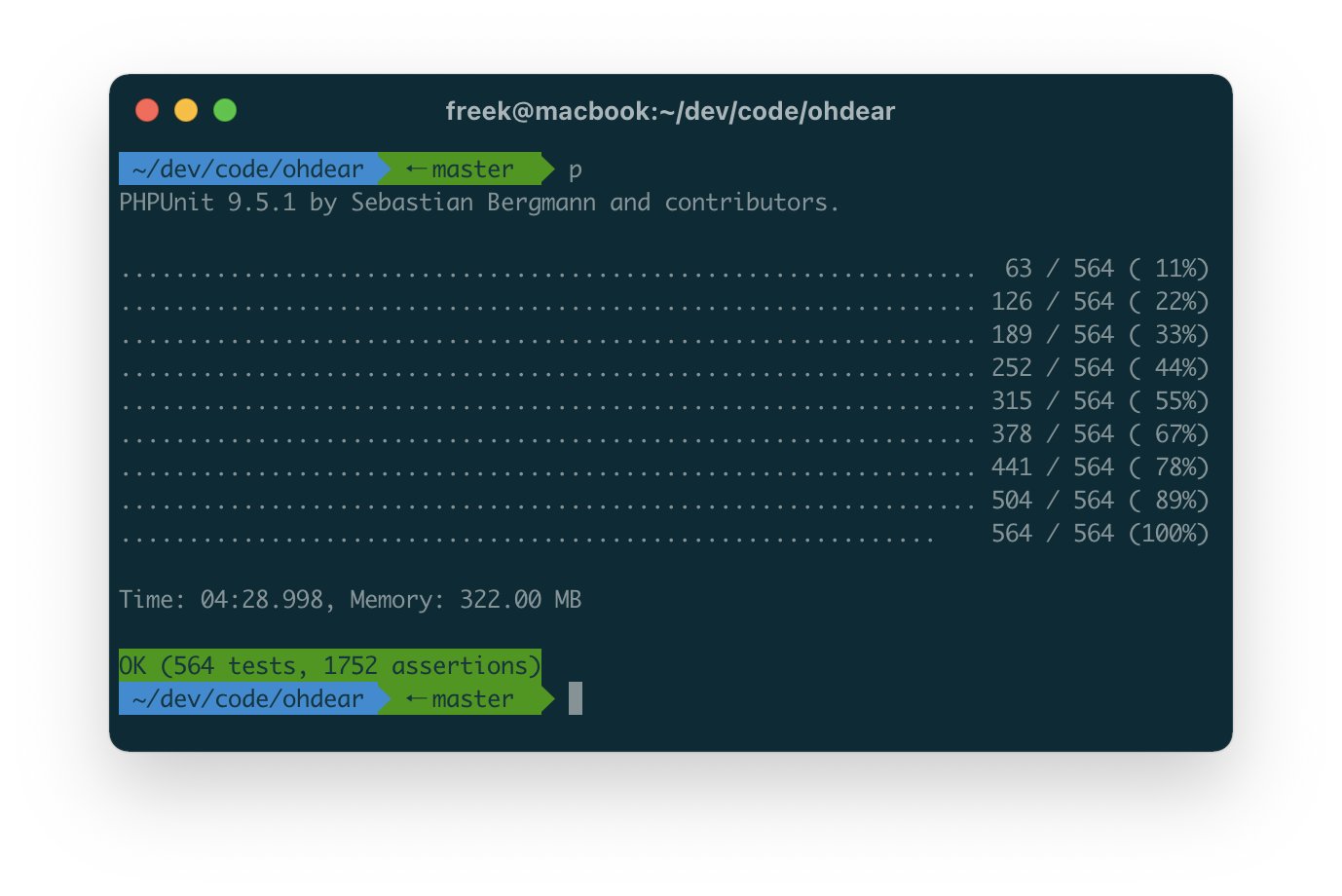

Here is the test output when running all tests sequentially. The time needed is 4m28s.

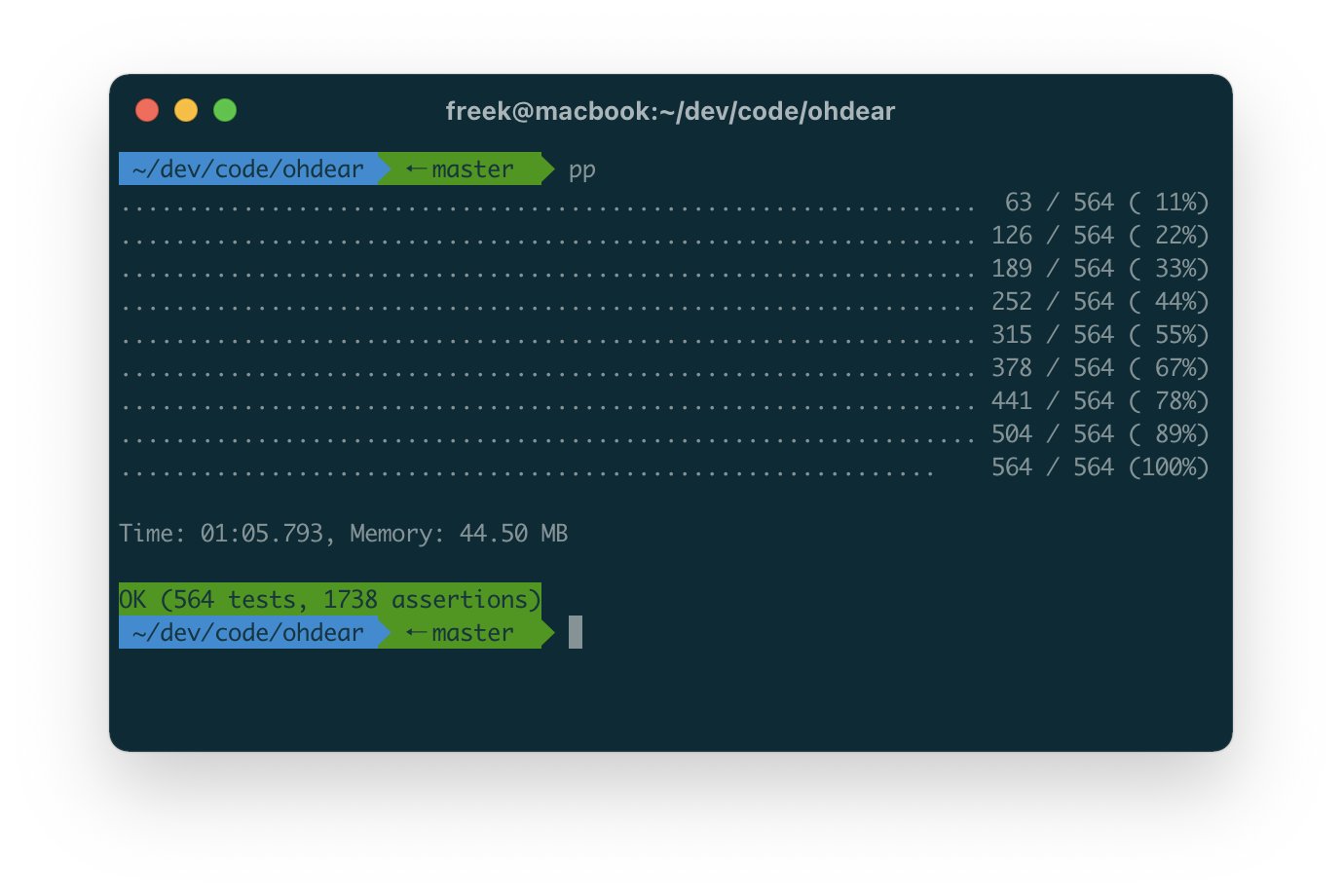

And here's the test output when using parallel testing. It only took 1m05s.

In this blog post you'll learn what parallel testing is, and which changes we needed to make to our tests to use this awesome feature.

What is parallel testing?

By default, PHPUnit runs each test in our suite sequentially. A test will only start if a previous one has finished. While this has the advantage of being a very simple strategy, it's not an optimal approach.

Most of our tests make use of the database. At the start of each test, the database is cleared. At the end of the test, we verify that the expected results were written to the database. If multiple tests were running at the same time against the same database, we could get unexpected results, as a test would also see the changes made by other tests.

In the latest version of Laravel, you can run tests in parallel by using the parallel option when running tests.

php artisan test --parallel

Under the hood Laravel will leverage ParaTest by Brian Scaturro to run multiple PHPUnit processes concurrently. Most of the heavy work for parallel testing is performed in ParaTest. By default, ParaTest will create one test process per core in your computer.

The parallel testing additions in Laravel also handle the creation and migration of databases. For each PHPUnit process, Laravel will automatically create and configure a database, so each test can still use an isolated database. If your computer has 4 cores, then 4 databases will be created, and Laravel will make sure that each database is only used by one test at any given time.
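To make the idea concrete, here is a minimal sketch in plain PHP of how a database name per process could be derived from a worker token. The `testDatabaseName` helper, the `ohdear` base name and the `_test_` suffix format are assumptions made for this illustration, not a description of Laravel's internals.

```php
<?php

// Hypothetical helper: derive an isolated database name for one worker process.
function testDatabaseName(string $baseName, ?string $token): string
{
    // Without a token (a sequential run) keep using the base database.
    if ($token === null || $token === '') {
        return $baseName;
    }

    return "{$baseName}_test_{$token}";
}

// Simulate four worker processes, as on a 4-core machine.
// The base name "ohdear" is made up for this example.
$names = array_map(
    fn (int $worker): string => testDatabaseName('ohdear', (string) $worker),
    range(1, 4)
);

print_r($names);
```

Because every worker gets its own name, clearing the database at the start of a test can never wipe data that another running test depends on.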

Additional changes to our test suite

It's really nice that Laravel makes sure each test has its own database to work with. However, there are also other resources that, when used concurrently, can mess up the assertions a test makes. Let's dive into two concrete examples from the Oh Dear test suite.

Checking contents of mails in parallel tests

Oh Dear sends several emails to all users. In our tests we make sure that those emails work correctly. One of those mails is the uptime report we send at the beginning of every month.

In Laravel you can fake all mails. This allows you to assert that a mail was sent, and who it was sent to. Mails won't actually be sent or rendered, so you can't make assertions on the content. To test the content of mails, Laravel provides a few handy assertion methods on a Mailable.

In Oh Dear we use both methods, but we also use a third approach. With the spatie/laravel-mail-preview package, mails are not sent but written to disk instead. This allows us to make assertions against those written mails. In our tests we can just call code that sends mails; there's no need for Mail::fake() or for holding an instance of a Mailable in the test.

Here's a concrete example. In this test we call an Artisan command that sends mails, and we assert that the right content is in the sent mail.

    /** @test */
    public function it_can_report_the_uptime_statistics()
    {
        Site::factory()->create(['url' => 'https://spatie.be']);

        // will seed uptime history records with 99.98% uptime
        $this->generateUpAndDownTime();

        TestTime::addMonth();

        $this->artisan('ohdear:email-monthly-uptime-reports');

        $this
            ->assertLatestSentMailContains("spatie.be")
            ->assertLatestSentMailContains("99.98%");
    }

Here's the implementation of that assertLatestSentMailContains method. It finds the latest sent mail and asserts that the passed substring is in the content of that mail.

    protected function assertLatestSentMailContains(string $substring): self
    {
        $mailStorageDirectory = config('mail-preview.storage_path');

        $sentMails = collect(File::allFiles($mailStorageDirectory));

        $latestMailPath = $sentMails
            ->sortByDesc(fn(SplFileInfo $file) => $file->getMTime())
            ->first()
            ->getPathName();

        $latestMailContent = file_get_contents($latestMailPath);

        $this->assertStringContainsString($substring, $latestMailContent);

        return $this;
    }

When all our tests run sequentially, they pass just fine. With parallel testing, this test, and all others that use assertLatestSentMailContains, start to fail.

Why? Because all those tests write mails in the same directory, so the latest sent mail could have been sent in the code from another test.
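The failure mode can be reproduced without Laravel. The sketch below, in plain PHP, simulates two test processes writing mails into one shared directory and then looks up the newest file, much like assertLatestSentMailContains does. The directory, the file names and the `latestFileContent` helper are made up for the illustration, and modification times are set explicitly so the outcome doesn't depend on timing.

```php
<?php

// Hypothetical helper: return the content of the most recently modified file.
function latestFileContent(string $directory): string
{
    clearstatcache();
    $paths = glob($directory . '/*.html');

    // Sort so that the newest file comes first.
    usort($paths, fn (string $a, string $b): int => filemtime($b) <=> filemtime($a));

    return file_get_contents($paths[0]);
}

// One directory shared by both simulated test processes.
$sharedDirectory = sys_get_temp_dir() . '/mail-preview-demo-' . uniqid();
mkdir($sharedDirectory);

$now = time();

// Process 1 sends the mail its test is about to assert on.
file_put_contents($sharedDirectory . '/uptime-report.html', 'spatie.be 99.98%');
touch($sharedDirectory . '/uptime-report.html', $now);

// Process 2 sends an unrelated mail a moment later.
file_put_contents($sharedDirectory . '/invoice.html', 'Your invoice');
touch($sharedDirectory . '/invoice.html', $now + 1);

// Process 1 now finds the mail of process 2 instead of its own.
$latest = latestFileContent($sharedDirectory);
var_dump(str_contains($latest, '99.98%')); // bool(false)

// Clean up the demo files.
array_map('unlink', glob($sharedDirectory . '/*.html'));
rmdir($sharedDirectory);
```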

This can be fixed by using a separate directory per test process. When using parallel testing, the ParallelTesting::token() method will return a token that is guaranteed to be unique among all concurrently running test processes. The setUp method in our base TestCase is executed before each test. In that setUp method we're calling this new method, configureMailPreviewDirectory().

    protected function configureMailPreviewDirectory(): void
    {
        $previewMailDirectory = 'storage/email-previews-' . ParallelTesting::token();

        config()->set('mail-preview.storage_path', $previewMailDirectory);

        if (File::exists($previewMailDirectory)) {
            File::deleteDirectory($previewMailDirectory);
        }

        File::makeDirectory($previewMailDirectory);
    }

In configureMailPreviewDirectory we ensure that an empty directory exists for each value the testing token can have. We also dynamically change the configuration of the laravel-mail-preview package, so that this directory is used to save the mails sent by a particular test.
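The same idea can be sketched without the framework. ParaTest exposes the worker token through the `TEST_TOKEN` environment variable, so a directory per process can be derived from it directly. The `previewDirectoryFor` helper, the `sequential` fallback label and the use of the system temp directory are assumptions made for this example; they are not part of laravel-mail-preview.

```php
<?php

// Hypothetical helper: build (and empty) a mail directory for one worker process.
function previewDirectoryFor(string $baseDirectory, string|false $token): string
{
    // Fall back to a fixed label when tests are not running in parallel.
    $suffix = ($token === false || $token === '') ? 'sequential' : $token;
    $directory = "{$baseDirectory}/email-previews-{$suffix}";

    // Start from an empty directory (this sketch only handles flat directories).
    if (is_dir($directory)) {
        array_map('unlink', glob("{$directory}/*") ?: []);
        rmdir($directory);
    }

    mkdir($directory, 0777, true);

    return $directory;
}

$base = sys_get_temp_dir() . '/mail-preview-' . uniqid();

// getenv() returns false when the variable is not set.
$current = previewDirectoryFor($base, getenv('TEST_TOKEN'));

// Two workers never share a directory.
$workerOne = previewDirectoryFor($base, '1');
$workerTwo = previewDirectoryFor($base, '2');

var_dump($workerOne !== $workerTwo); // bool(true)
```

The explicit fallback keeps the directory name meaningful when the suite runs sequentially and no token is available.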

Preparing the internal test server for parallel tests

The second place where we had to make some changes to make parallel tests work is the test server that is booted within our tests. This way of testing is not that common, but I wanted to mention it here for those of you interested in what tests specific to Oh Dear's core functionality look like.

Oh Dear can check if a site is up, if there are broken links on a page of a site, and much more. To speed up some of the tests, we're not using a real world site to check against.

Instead, our test suite starts a Lumen based web server. Here's the content of one of the tests where our Lumen server is used.

    protected function setUp(): void
    {
        parent::setUp();

        $this->check = Check::factory()->create([
            'type' => CheckType::BROKEN_LINKS,
            'site_id' => Site::factory()->create([
                'url' => 'http://localhost:8181',
                'broken_links_check_include_external_links' => true,
            ]),
        ]);

        $this->run = Run::createForCheck($this->check);

        Server::boot();

        Event::fake();
    }

    /** @test */
    public function it_can_perform_a_run_and_detect_broken_links()
    {
        Server::activateRoutes('brokenLinks');

        app(CrawlerChecker::class)->perform([$this->run]);

        $this->run->refresh();

        $this->assertEquals(7, $this->run->crawledUrlsForBrokenLinks()->count());

        $brokenLinks = $this->run->brokenLinks()->toArray();

        $this->assertEquals([
            [
                'crawled_url' => 'https:::///invalidLink',
                'status_code' => null,
                'found_on_url' => 'http://localhost:8181/',
            ],
            [
                'crawled_url' => 'http://localhost:8181/broken',
                'status_code' => 404,
                'found_on_url' => 'http://localhost:8181/link3',
            ],
            [
                'crawled_url' => 'https://ohdearthisdomaincertainlydoesntexist',
                'status_code' => null,
                'found_on_url' => 'http://localhost:8181/',
            ],
        ], $brokenLinks);

        Event::assertDispatched(BrokenLinksFound::class);
        Event::assertNotDispatched(BrokenLinksFixed::class);
    }

There's a lot going on in the code above.

In short, this test will instruct our Server (this is our Lumen test server) to load the brokenLinks routes file. This routes file contains routes whose responses contain a couple of broken links.

A check being performed is called a run in Oh Dear. In the test above we are going to let our Crawler checker perform a run of type CheckType::BROKEN_LINKS. After the check is done, we can assert that the expected number of pages was crawled, and that broken links were found.

Let's take a look at the code of the Server class. It's a lot of code, but don't be scared. This code will:

- run composer install to install the server's dependencies if they are not installed yet

- start up the Lumen server in the background, and wait until the boot process is complete

- shut down the server after the tests have run

- provide a method to dynamically change the route file used by the Lumen app

    use ErrorException;
    use GuzzleHttp\Client;
    use Illuminate\Support\Facades\ParallelTesting;

    class Server
    {
        protected Client $client;

        public function __construct(?Client $client = null)
        {
            static::boot();

            $this->client = $client ?? new Client();
        }

        public static function boot()
        {
            // install the server's dependencies on the first run
            if (! file_exists(__DIR__.'/vendor')) {
                exec('cd "'.__DIR__.'"; composer install');
            }

            if (static::serverHasBooted()) {
                return;
            }

            // start PHP's built-in web server in the background and capture its process id
            $startServerCommand = 'php -S '.rtrim(static::getServerUrl(), '/').' -t ./tests/Server/public > /dev/null 2>&1 & echo $!';

            $pid = exec($startServerCommand);

            while (! static::serverHasBooted()) {
                sleep(1);
            }

            // stop the server when the PHP process that started it ends
            register_shutdown_function(function () use ($pid) {
                @exec("kill {$pid} 2>/dev/null");
            });
        }

        public static function getServerUrl(string $endPoint = ''): string
        {
            return 'localhost:'.getenv('TEST_SERVER_PORT').'/'.$endPoint;
        }

        public static function serverHasBooted(): bool
        {
            $context = stream_context_create(['http' => [
                'timeout' => 1,
            ]]);

            try {
                $result = file_get_contents('http://'.self::getServerUrl('booted'), false, $context) !== false;
            } catch (ErrorException) {
                $result = false;
            }

            return $result;
        }

        public static function activateRoutes(string $routeConfiguration)
        {
            $token = ParallelTesting::token();

            // each test process writes its own config file
            file_put_contents(__DIR__ ."/public/config-{$token}.json", json_encode(['routes' => $routeConfiguration]));
        }
    }
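The boot method above keeps polling until the server responds. If you'd rather have a failed boot surface as an error than as a hanging test run, the wait can be bounded. Here's a minimal sketch in plain PHP; the `waitUntil` helper and its parameters are hypothetical and not part of our Server class.

```php
<?php

// Hypothetical helper: poll a check until it passes or a deadline is reached.
function waitUntil(callable $check, float $timeoutInSeconds, int $intervalInMicroseconds = 100_000): bool
{
    $deadline = microtime(true) + $timeoutInSeconds;

    do {
        if ($check()) {
            return true;
        }

        usleep($intervalInMicroseconds);
    } while (microtime(true) < $deadline);

    return false;
}

// Stand-in for Server::serverHasBooted(): reports success on the third poll.
$polls = 0;
$hasBooted = function () use (&$polls): bool {
    return ++$polls >= 3;
};

var_dump(waitUntil($hasBooted, 2.0));     // bool(true)
var_dump(waitUntil(fn () => false, 0.3)); // bool(false)
```

A caller could throw an exception when `waitUntil` returns false, so a broken server setup fails fast.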

In the activateRoutes method of the Server class you can see that we now use ParallelTesting::token(). The method uses that token to write a config file per concurrent test process, containing the route configuration to use. Before we supported parallel testing we only had one config file.

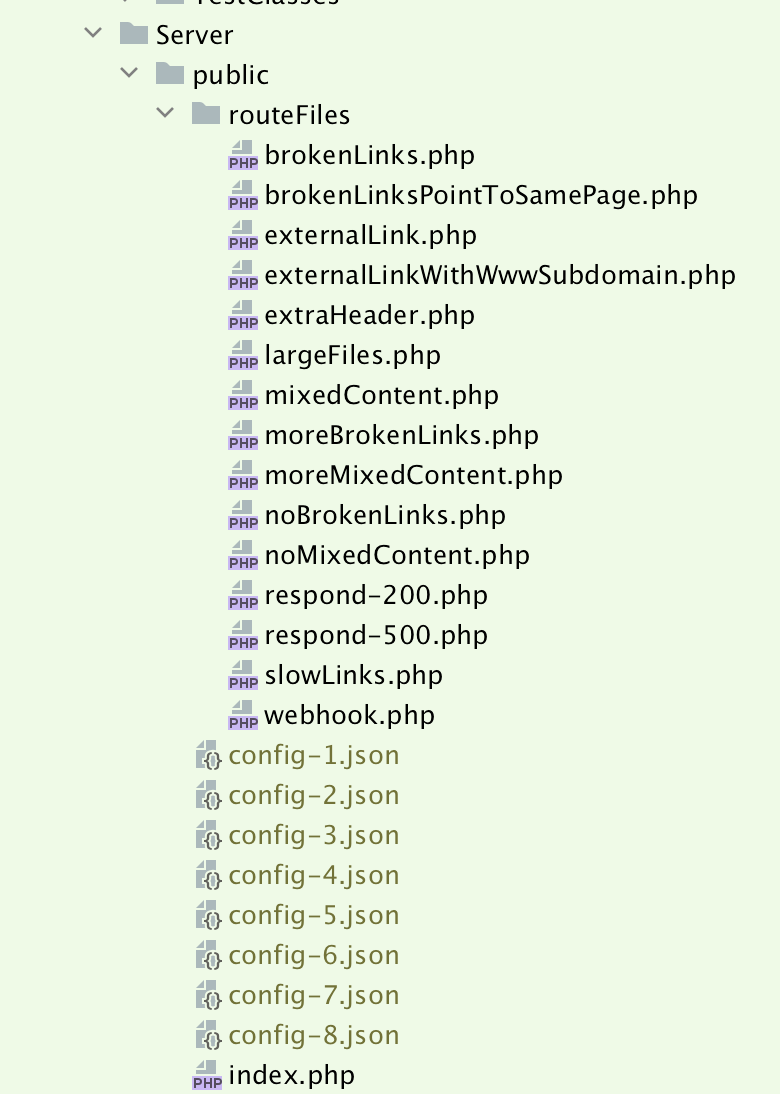

Here's what the entire Server directory in our tests looks like. The grayed out files are not under version control (as they are generated). You can see that we have one config file per test token (I have 8 cores on my development machine).

The index.php file contains the entire Lumen server setup. Let's take a look.

    use Laravel\Lumen\Http\Request;

    require_once __DIR__.'/../vendor/autoload.php';

    putenv('APP_DEBUG=true');

    $app = new Laravel\Lumen\Application(
        realpath(__DIR__.'/../')
    );

    $app->configure('app');

    $app->router->group([
        'namespace' => 'App\Http\Controllers',
    ], function ($router) use ($app) {
        $router->get('booted', fn () => 'app has booted');

        if ($token = Request::createFromGlobals()->header('testtoken')) {
            $routesFile = routesFileForToken($token);

            require $routesFile;
        }
    });

    $app->run();

    function routesFileForToken(string $token): string
    {
        $configFile = __DIR__ . "/config-{$token}.json";

        $config = json_decode(file_get_contents($configFile), true);

        $routesFile = __DIR__ . "/routeFiles/{$config['routes']}.php";

        return $routesFile;
    }

In the code above you see that we use the value in the testtoken header to determine which of the config files should be read.

In our CrawlerChecker class we added this piece of code in the function that determines the headers to be used.

    if (app()->environment('testing')) {
        $defaultHeaders['testtoken'] = ParallelTesting::token();
    }

Now you've seen everything that makes this work.

Let's summarise: in our it_can_perform_a_run_and_detect_broken_links test we use Server::activateRoutes('brokenLinks'); which configures the internal Lumen server to respond with the routes/responses defined in the brokenLinks routes file. This configuration is written to a file that contains the test token in its name. The CrawlerChecker adds the token to the headers of each request, so the Lumen server knows which configuration to use.
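The whole round trip can be condensed into a framework-free sketch. Only the config-{token}.json naming and the testtoken header are taken from the code above; the three functions, the temp directory and the `mixedContent` routes name are hypothetical stand-ins for the real test, the CrawlerChecker and the Lumen app.

```php
<?php

$publicDirectory = sys_get_temp_dir() . '/server-public-' . uniqid();
mkdir($publicDirectory);

// 1. The test side: store which routes file this worker wants to use.
function activateRoutes(string $publicDirectory, string $token, string $routes): void
{
    file_put_contents(
        "{$publicDirectory}/config-{$token}.json",
        json_encode(['routes' => $routes])
    );
}

// 2. The client side: add the worker token to every outgoing request.
function headersFor(string $token): array
{
    return ['testtoken' => $token];
}

// 3. The server side: use the header to find the routes for this worker.
function routesNameForHeaders(string $publicDirectory, array $headers): ?string
{
    $token = $headers['testtoken'] ?? null;
    $configFile = "{$publicDirectory}/config-{$token}.json";

    // No token or no config file: there is nothing to load.
    if ($token === null || ! is_file($configFile)) {
        return null;
    }

    return json_decode(file_get_contents($configFile), true)['routes'];
}

// Two workers activate different routes at the same time.
activateRoutes($publicDirectory, '1', 'brokenLinks');
activateRoutes($publicDirectory, '2', 'mixedContent');

echo routesNameForHeaders($publicDirectory, headersFor('1')), PHP_EOL; // brokenLinks
echo routesNameForHeaders($publicDirectory, headersFor('2')), PHP_EOL; // mixedContent
```

Since the token travels with every request, a single server process can serve all workers without any of them seeing another worker's routes.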

Why is the difference so big?

Besides tests that use our Lumen server, the Oh Dear test suite contains a lot of tests that reach out to real world sites to test things. The tests that make sure our certificate check is working correctly will reach out to badssl.com, a site that provides examples of broken certificates. Reaching out to this site takes time. Our test suite is mostly constrained by the network, and not by CPU or memory.

With all tests running sequentially, a lot of time was lost just waiting for network requests to finish. With parallel testing, other tests are being performed instead of just waiting around.
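For reference, this is the speedup implied by the two timings reported at the top of this post, worked out in a few lines of PHP. The `toSeconds` helper is just for this calculation.

```php
<?php

// Convert a duration given in minutes and seconds to seconds.
function toSeconds(int $minutes, int $seconds): int
{
    return $minutes * 60 + $seconds;
}

$sequential = toSeconds(4, 28); // 268 seconds
$parallel = toSeconds(1, 5);    // 65 seconds

$speedup = round($sequential / $parallel, 2);

echo "Speedup: {$speedup}x", PHP_EOL; // Speedup: 4.12x
```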

In closing

We think parallel testing is a very nice addition to Laravel. This feature was already available for quite some time in Rails. I'm sure that this inspired the Laravel team to add the feature.

Even though people could already use ParaTest directly, having Laravel take care of databases so nicely in parallel tests will surely help drive adoption. After the feature was released in Laravel, a lot of happy people tweeted out screenshots of dramatically improved testing times, and we were among them.

I spent some time cleaning up the test suite of @OhDearApp

Here are the results when using sequential and parallel testing.

Sequential: 04m28s - 320 MB

Parallel: 01m05s - 44.5 MB

https://t.co/stLO4y3AdI #php #laravel pic.twitter.com/HlYFPkIrb9

Freek Van der Herten 📯 (@freekmurze), January 26, 2021

To learn more about Laravel testing, check out the Laravel docs.