Broken links troubleshooting and a couple of extras

Published on April 7, 2025 by Sean White

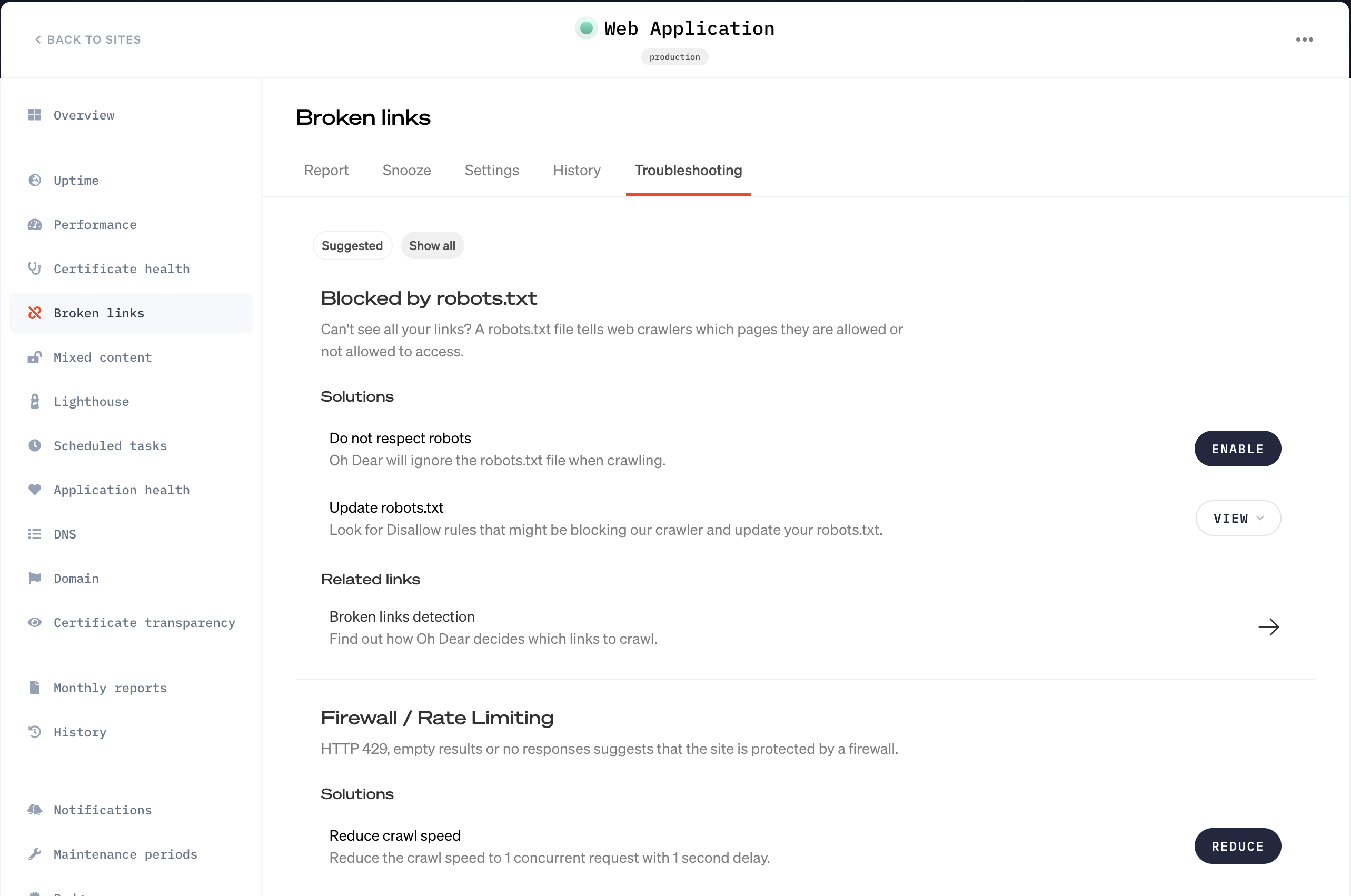

We are excited to announce a powerful new feature in our Broken Links monitor: Troubleshooting.

When we can’t crawl all your links, or you see unexpected gaps in the results, it can be tricky to know where to start. Our new Troubleshooting tab is here to help.

Quickly identify and resolve crawling issues

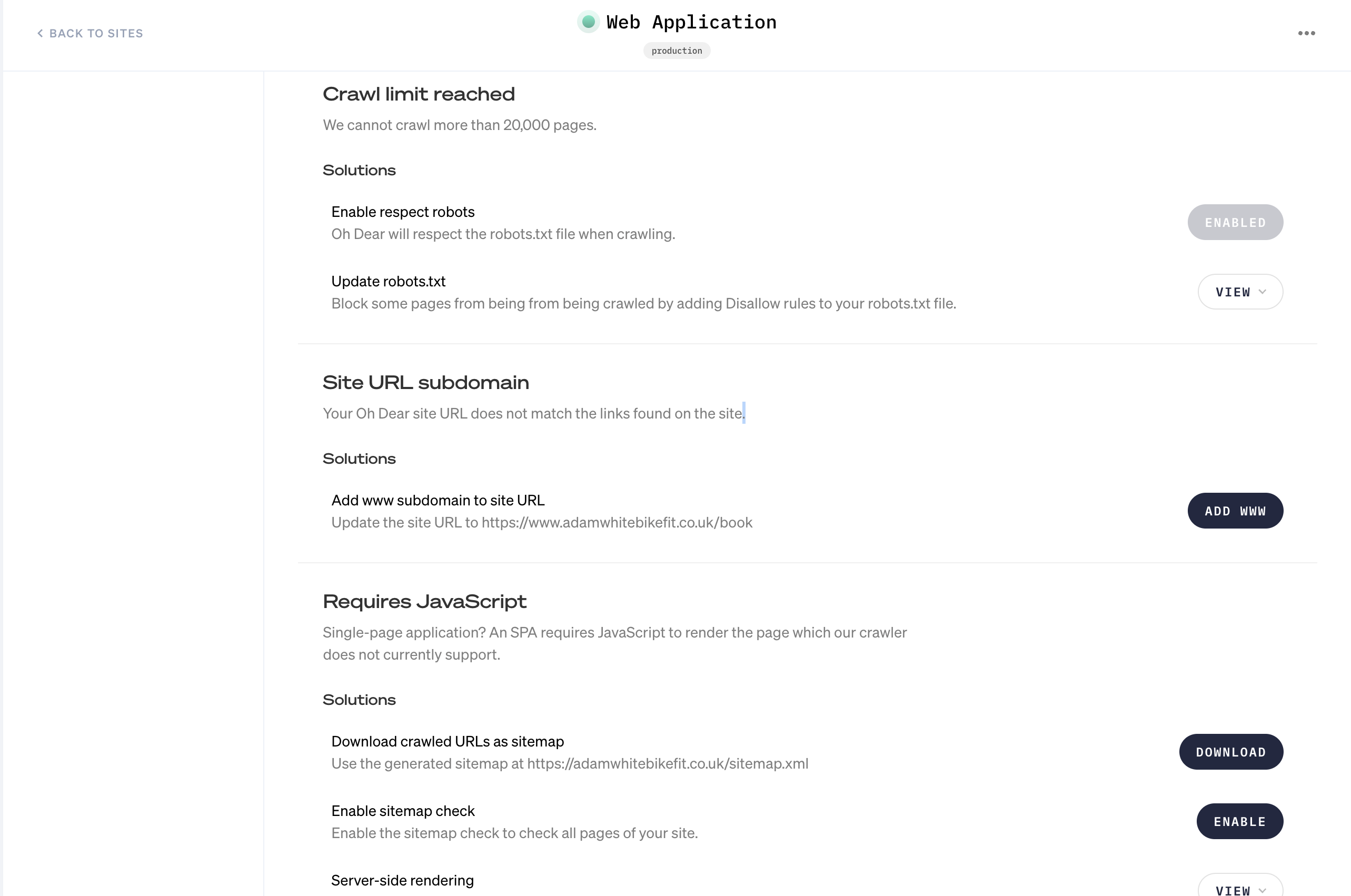

The Troubleshooting tab detects common issues that may affect link checking and gives you clear guidance to resolve them. In some instances you can even resolve them with one click. Is robots.txt preventing us from accessing your site? Is your firewall rate-limiting us? Or maybe you are linking to www when your site URL is different?
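
If you want to check the robots.txt case yourself, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the `ExampleCrawler` user agent name and the URLs are made up for illustration; this is not how Oh Dear performs the check internally, and you would substitute the user agent our crawler actually sends.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # "ExampleCrawler" is a placeholder user agent, not a real crawler name.
    for url in ("https://example.com/blog/", "https://example.com/admin/users"):
        print(url, is_crawlable(ROBOTS_TXT, "ExampleCrawler", url))
```

Any URL that comes back as not crawlable here is one a robots.txt-respecting crawler would skip, which can explain gaps in a report.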

Every broken links report page now has two troubleshooting filters: "suggested", which shows the actions relevant to your results, and "all", which lists every possible issue and solution. Think of it as interactive documentation and FAQs (which we have also linked to). There's even a short API snippet to trigger a new broken links run - perfect for post-deployment hooks.

Why it matters

Our goal is to make it easy for teams of all sizes to catch and fix broken links, and to quickly identify and resolve any crawling issues along the way. With this update, even the trickier edge cases become actionable and transparent. Take a look at what we have got so far - we will be keeping this up-to-date based on feedback, so keep an eye out! And if you're still having trouble, our support team is just a click away in the support bubble.

New robots.txt controls

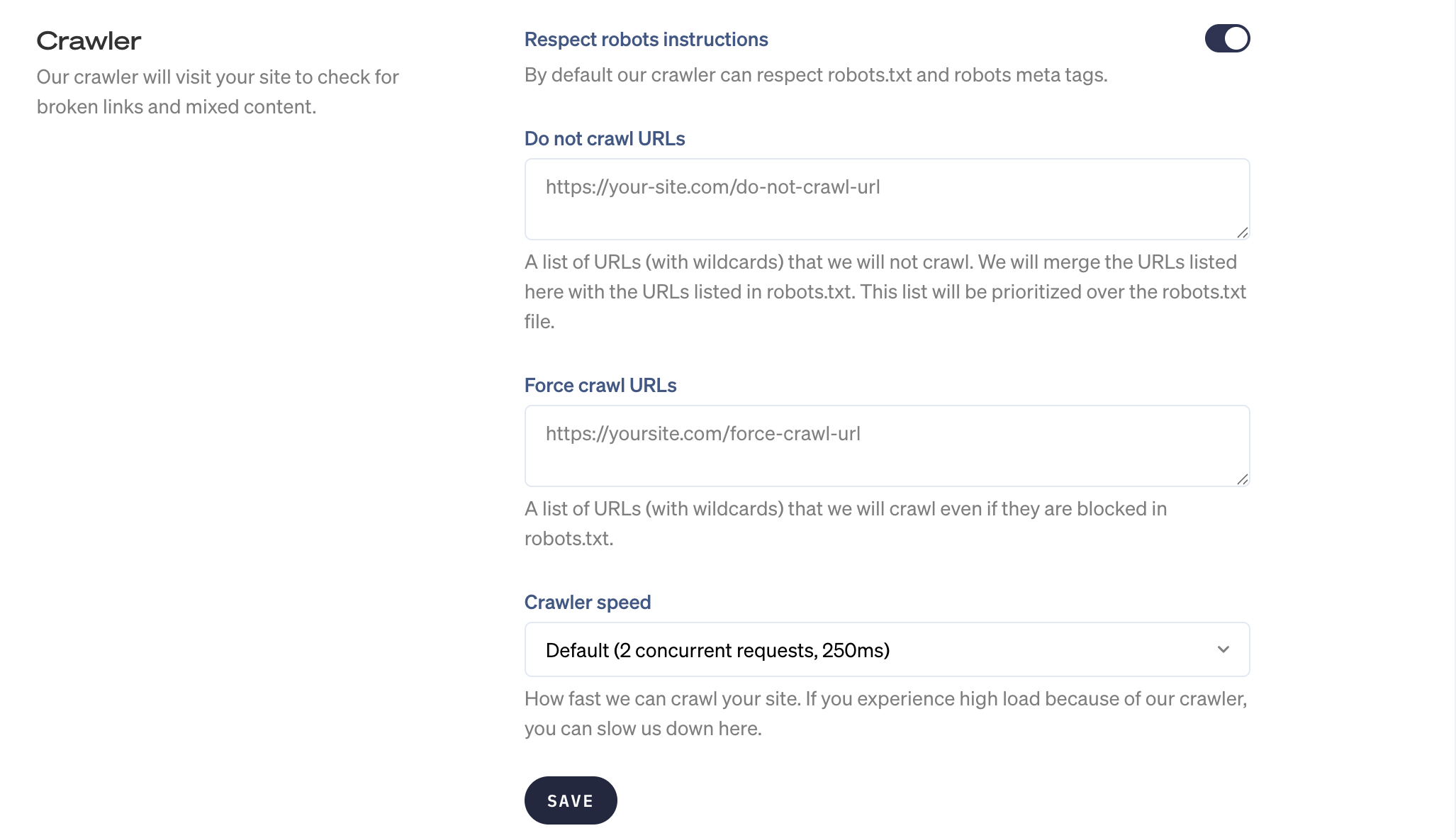

We have always allowed our users to 'ignore' broken links, but sometimes you don't want us to crawl certain pages at all. That is especially tricky when you want us to respect your robots.txt file but don't have access to the server config to change it.

So we have added advanced settings for a couple of edge cases:

Force crawl specific URLs: If you can't or don’t want to modify your robots.txt, you can manually add specific pages to an allowlist that should always be crawled - even if disallowed.

Do not crawl specific URLs: Useful to exclude sensitive pages, admin areas, or known false positives.

These options give you just a little bit more control over how Oh Dear crawls your site.
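
To make the interplay of the two settings concrete, here is a rough sketch of how such rules could combine with robots.txt. The function name, the exact-match URL sets and the `ExampleCrawler` user agent are all hypothetical, and the precedence shown (exclusions first, then forced URLs, then robots.txt) is an assumption for illustration rather than a description of Oh Dear's actual behaviour.

```python
from urllib.robotparser import RobotFileParser


def should_crawl(url, robots_txt, force_crawl, do_not_crawl,
                 user_agent="ExampleCrawler"):
    """Decide whether a URL is crawled, under the assumed precedence."""
    if url in do_not_crawl:
        # Explicit exclusion wins (assumption).
        return False
    if url in force_crawl:
        # Allowlisted: crawled even if robots.txt disallows it.
        return True
    # Otherwise fall back to what robots.txt says.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical settings, for illustration only.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"
FORCE = {"https://example.com/private/pricing"}
SKIP = {"https://example.com/admin"}

if __name__ == "__main__":
    for url in ("https://example.com/private/pricing",
                "https://example.com/private/other",
                "https://example.com/admin",
                "https://example.com/blog"):
        print(url, should_crawl(url, ROBOTS_TXT, FORCE, SKIP))
```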

Coming soon

We have more broken links updates coming to your notification settings soon! Tired of receiving the same alert every day for the same broken links? You will soon be able to get a follow-up notification only when new broken links are found, giving you a bit more time to get them fixed without constantly snoozing the notification.
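
The idea boils down to comparing the latest results with the ones you were already told about. A minimal sketch, with hypothetical function names and made-up URLs (the real feature may work differently once released):

```python
def new_broken_links(previous: set[str], current: set[str]) -> set[str]:
    """Return links that are broken now but were not in the previous run."""
    return current - previous


def should_notify(previous: set[str], current: set[str]) -> bool:
    """Send a follow-up only when at least one new broken link appears."""
    return bool(new_broken_links(previous, current))


if __name__ == "__main__":
    # Hypothetical results from two consecutive runs.
    yesterday = {"https://example.com/old-page", "https://example.com/docs/v1"}
    today = {"https://example.com/old-page", "https://example.com/pricing"}

    print(should_notify(yesterday, today))
    print(sorted(new_broken_links(yesterday, today)))
```

If the set of broken links stays the same or only shrinks, no follow-up would be sent.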

Keep an eye on Oh Dear to find out when this is released :)